Developers can now experiment with multimodal controls in the Meta Quest v62 SDK, which includes Capsense (Hybrid Hands) and Wide Motion Mode (WMM)

Meta has published v62 of the Meta XR Core SDK, introducing three significant additions for developers to integrate into their apps. The new features are also showcased in the Meta Interaction SDK Samples demo app, now available in the Meta app store for all to explore.

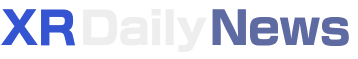

The Meta Quest v62 SDK now includes Simultaneous Hands and Controllers tracking (Multimodal), a feature first introduced as experimental in 2023 with v56 and now part of the official Meta XR Core SDK. It lets users rely on hand tracking and controller tracking at the same time, allowing for scenarios where one hand remains free while the other holds a controller.

The second new feature is Capsense, which enables developers to display a natural hand model visualization either on top of or instead of a user’s controller. Both Multimodal and Capsense functionalities can be observed in the video below.

The third new feature, Wide Motion Mode (WMM), enables developers to track hands and display plausible hand poses even when they are outside the headset’s field of view, enhancing social presence and more. Read more about each feature below.

Simultaneous Hands and Controllers (Multimodal)

As mentioned earlier, Multimodal enables simultaneous tracking of both hands and controllers. This feature is exclusive to Quest Pro and Quest 3 controllers, meaning it is available for Quest 2 users only if they have Touch Pro controllers.

From the Multimodal documentation, we can learn, among other things, that:

- Users can wield a controller in one hand as (for instance) a weapon, benefiting from its high accuracy and haptics, while keeping the other hand free for actions like casting spells. This opens up vast possibilities for developers to creatively implement this feature.

- Single-controller gameplay is supported. This can benefit games like Eleven Table Tennis, where one controller serves as a paddle while the other hand manages ball throws. Multimodal features enhance user comfort in such scenarios.

- Transitions between hands and controllers are now instant. Hand tracking remains active while controllers are in use, so the headset can quickly detect when a controller is no longer being held. This resolves the slow or failed automatic transitions of the past, and users no longer have to switch between controller and hand tracking manually, for example by tapping the controllers together twice.

Learn more about Multimodal here.

Capsense (Hybrid Hands)

Capsense, previously introduced in 2023 with v56 as an experimental feature, is now officially released and freely available to developers. It uses tracked controller data to drive a standardized set of hand animations and poses, allowing for:

- Natural hand poses, which simulate interactions as if the user were not holding a controller and were engaging naturally with their hand.

- Hand poses for controllers, designed to be displayed alongside the controller itself, with shapes that vary depending on the controller type.

Capsense currently supports controllers for Quest 2, Quest 3, and Quest Pro. Learn more about Capsense here.

Wide Motion Mode (WMM) on Quest 3 – Bigger Hand Tracking FOV

Wide Motion Mode (WMM) is an intriguing feature, currently exclusive to Quest 3. However, Meta says it will extend to future Quest devices beyond Quest 3, which may hint at its inclusion in the upcoming Meta Quest 3 Lite. If so, the Lite version would likely have a camera setup similar to Quest 3’s, as Wide Motion Mode relies on Inside Out Body Tracking (IOBT), a feature only present in Quest 3 thanks to its downward-facing cameras.

WMM allows the headset to track users’ hands and display plausible hand poses even when the hands are outside the headset’s camera FOV. This is of course only an estimation of your real hands’ position, but, according to Meta, it can bring benefits like:

- Enhanced social interaction with believable hand gestures, even when hands are beyond the field of view.

- Better performance in wide-motion activities like arm swings, throwing objects, and more.

- Decreased chances of interaction disruptions when users turn their heads.

- Potential for innovative gameplay mechanics like arm swing locomotion, akin to Echo VR (although that game has since been shut down).

For further details on Wide Motion Mode and integrating it into your projects, refer to the documentation provided here.

Combining all these features could greatly enhance the hand-tracking experience in both apps and games. Currently, however, that is not possible: developers cannot use Wide Motion Mode (WMM) and Multimodal at the same time, so it is up to them to decide which feature suits their app better. Our note here: Meta should consider making Multimodal the default, or at least accessible, in the main interface. This would make tasks like scrolling or navigating the UI much smoother for users.
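To make the restrictions described in this article easier to reason about, here is a minimal Python sketch that models them as plain data. It is not Meta SDK code and does not call any real Meta API; every name in it (`Headset`, `Controller`, `check_feature_request`) is hypothetical, and the rules are taken only from the statements above: Multimodal needs Quest Pro or Quest 3 controllers, WMM needs a Quest 3 headset, and the two cannot be enabled together.

```python
from __future__ import annotations

from enum import Enum, auto


class Headset(Enum):
    QUEST_2 = auto()
    QUEST_PRO = auto()
    QUEST_3 = auto()


class Controller(Enum):
    # Hypothetical labels for the controller families named in the article.
    QUEST_2_CONTROLLER = auto()
    QUEST_PRO_CONTROLLER = auto()
    QUEST_3_CONTROLLER = auto()


# Assumption from the article: Multimodal only works with these controllers.
MULTIMODAL_CONTROLLERS = {
    Controller.QUEST_PRO_CONTROLLER,
    Controller.QUEST_3_CONTROLLER,
}


def check_feature_request(
    headset: Headset,
    controller: Controller,
    want_multimodal: bool,
    want_wmm: bool,
) -> list[str]:
    """Return a list of problems; an empty list means the request is fine."""
    problems: list[str] = []

    if want_multimodal and controller not in MULTIMODAL_CONTROLLERS:
        problems.append("Multimodal needs Quest Pro or Quest 3 controllers")

    if want_wmm and headset is not Headset.QUEST_3:
        problems.append("Wide Motion Mode is currently Quest 3 only")

    if want_multimodal and want_wmm:
        problems.append("Multimodal and Wide Motion Mode cannot be combined")

    return problems


if __name__ == "__main__":
    # A Quest 3 app asking for both features hits the mutual-exclusion rule.
    for problem in check_feature_request(
        Headset.QUEST_3, Controller.QUEST_3_CONTROLLER, True, True
    ):
        print(problem)
```

A check like this could sit in an app's startup path so that an unsupported combination is caught early and the app falls back to whichever single feature matters more for its design.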

Moreover, hand tracking enhances social experiences, as gestures are integral to communication. Enabling Wide Motion Mode in social games like VRChat would be highly beneficial: among other things, it would reduce the awkwardness of hands snapping back to their default position at the side of the body whenever they move out of the headset’s field of view.
